How Torque Solves the Complexity Problem in Cloud Software Systems

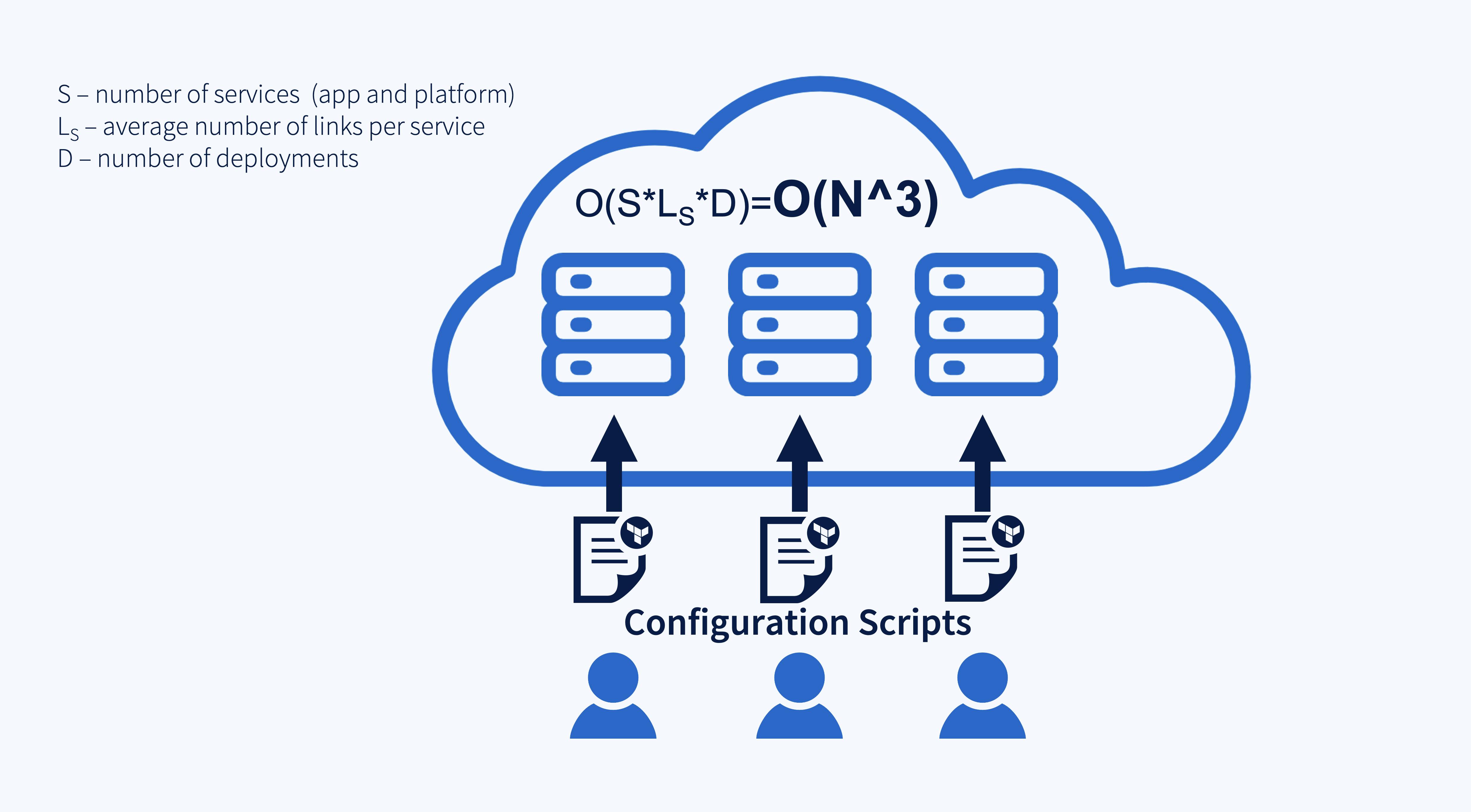

As a software developer, you know that the standard way of managing cloud systems is to copy and paste code for each deployed service in every environment. To configure a cloud system, developers have to touch the configuration parameters and scripts of every service, and then of every service it connects to. The complexity of the system can therefore be represented as the number of services multiplied by the number of links per service.

And that complexity multiplies again with the number of deployments. Because the configuration for each deployment is handled independently, even a small change has to be repeated in every deployment, so the work keeps growing over time.
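Here is a back-of-the-envelope sketch of that model. It assumes every link of every service is configured separately, and that this work is repeated in every deployment. The function name and the numbers are illustrative, not measurements.

```python
# Illustrative model of the copy-paste approach: each service is configured
# once per link it has, and that work is repeated in every deployment.
def copy_paste_touch_points(services: int, links_per_service: int, deployments: int) -> int:
    """Return the number of configuration touch points in the copy-paste model."""
    per_deployment = services * links_per_service
    return per_deployment * deployments


# 10 services with 3 links each, in 3 and then 4 environments
print(copy_paste_touch_points(10, 3, 3), copy_paste_touch_points(10, 3, 4))  # → 90 120
```

In this model, adding a fourth environment repeats all 30 per-deployment touch points.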

Current tools do not solve this complexity problem

Now, you might say that we have Terraform, Kubernetes, and other great tools to manage all of this for us. While they provide a number of benefits, they do not change the order of complexity. Developers still need to work through each service, configuration parameter by configuration parameter, along with every service it is connected to. It is a cascading problem with an exponential level of complexity.

We must move to loose coupling

To reduce this complexity, Torque developed an open-source cloud framework that decouples the system design from specific deployments. The goal is to reduce a system's complexity from exponential to linear while still allowing any kind of complex configuration.

NOTE: Cloud frameworks are different from application frameworks. They handle developer operations rather than deliver application functionality. Therefore, a cloud framework can be written in any language and still handle developer operations for applications written in any programming language.

Here’s how.

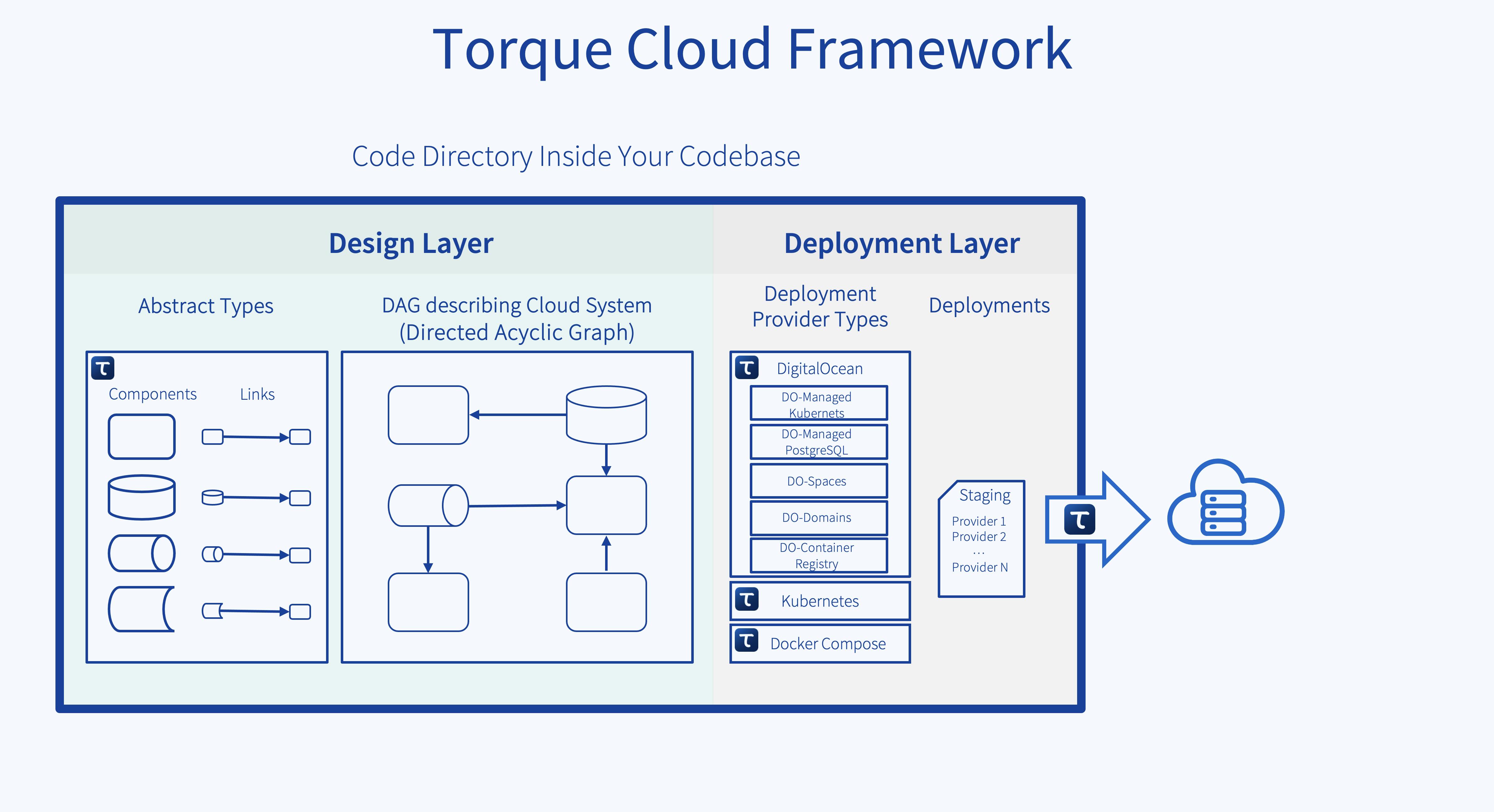

First, to make this concrete: it all comes as Python code that implements Torque's framework and lives as a directory in your codebase.

There are two layers: a layer that describes your system design and a layer for the deployment of the system. We'll explore how these layers work together to reduce the order of complexity from exponential to linear.

In the layer that describes your system design, we bring in abstract types that represent components, such as application services (in any programming language), databases, and queues, as well as the links between these components. These are simply implementations of Python classes from the Torque Framework. Torque's team provides a collection of abstract type implementations in our GitHub repository, but since this is code, you are not limited to what is currently available: you can use the open-source implementations or write your own.
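To illustrate the idea of abstract types, here is a minimal sketch in plain Python. The class names (`Component`, `PythonService`, `PostgresDatabase`, `Queue`, `Link`) are hypothetical and are not the actual Torque Framework API. They only show how a system can be described by what its parts are and how they connect, with no deployment details.

```python
# Hypothetical sketch: these classes are NOT the real Torque Framework API.
# They describe components and links without saying where anything runs.
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """Abstract component: knows what it is, not how it is deployed."""
    name: str


@dataclass(frozen=True)
class PythonService(Component):
    """An application service; its language is just a property of the type."""
    entrypoint: str = "app.main"


@dataclass(frozen=True)
class PostgresDatabase(Component):
    version: str = "15"


@dataclass(frozen=True)
class Queue(Component):
    """A message queue component."""


@dataclass(frozen=True)
class Link:
    """Abstract link meaning 'source depends on target'."""
    source: Component
    target: Component


api = PythonService("api")
db = PostgresDatabase("orders-db")
jobs = Queue("jobs")
links = [Link(api, db), Link(api, jobs)]
print([(link.source.name, link.target.name) for link in links])  # → [('api', 'orders-db'), ('api', 'jobs')]
```

Frozen dataclasses are hashable, so components and links can be used directly as graph nodes and edges.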

Then, we use components and links to assemble the DAG (Directed Acyclic Graph) that represents our cloud system. Currently, we use a CLI tool to describe and visualize the DAG.
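The DAG assembly step can be sketched with Python's standard `graphlib` module. This assumes components are identified by name and each link is a `(source, target)` pair meaning "source depends on target". The helper names `build_dag` and `deployment_order` are hypothetical; this is not Torque's CLI or data model.

```python
# Minimal sketch of assembling and validating a system DAG with the stdlib.
# Assumption: a link (source, target) means "source depends on target".
from graphlib import CycleError, TopologicalSorter


def build_dag(components: list[str], links: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each component to the set of components it depends on."""
    dag: dict[str, set[str]] = {name: set() for name in components}
    for source, target in links:
        if source not in dag or target not in dag:
            raise ValueError(f"link {source} -> {target} references an unknown component")
        dag[source].add(target)
    return dag


def deployment_order(dag: dict[str, set[str]]) -> list[str]:
    """Return components with dependencies first; reject graphs with cycles."""
    try:
        return list(TopologicalSorter(dag).static_order())
    except CycleError as exc:
        raise ValueError(f"not a DAG: cycle through {exc.args[1]}") from exc


dag = build_dag(
    ["api", "worker", "orders-db", "jobs"],
    [("api", "orders-db"), ("api", "jobs"), ("worker", "jobs"), ("worker", "orders-db")],
)
print(deployment_order(dag))
```

Several valid orders exist here. The guarantee is only that `orders-db` and `jobs` come before the services that depend on them.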

Next comes the layer for the deployment of the system. Before we can deploy the DAG, we need code that converts it into specific configurations, such as YAML files or HCL scripts, or that deploys it directly through a cloud provider API. For that, Torque gives you code that implements different deployment providers. Again, since this is code, you can use the ones prepared by Torque's team or make your own. That means an expert who has done this many times can create a deployment provider type that anybody else can use.
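A deployment provider can be sketched as an object that turns an abstract component into concrete configuration. `DeploymentProvider` and `ComposeProvider` are hypothetical names, not Torque's real classes. The provider below emits Docker Compose-style service entries (`image`, `depends_on`) as plain dicts, and the image choices are illustrative assumptions.

```python
# Hypothetical sketch of a deployment provider; not Torque's actual classes.
import json
from typing import Protocol


class DeploymentProvider(Protocol):
    def render(self, component: str, kind: str, depends_on: list[str]) -> dict: ...


class ComposeProvider:
    """Emits Docker Compose-style service entries as plain dicts."""

    # Illustrative image choices per component kind.
    IMAGES = {"postgres": "postgres:15", "queue": "rabbitmq:3"}

    def render(self, component: str, kind: str, depends_on: list[str]) -> dict:
        # Fall back to an image named after the component for app services.
        service: dict = {"image": self.IMAGES.get(kind, f"{component}:latest")}
        if depends_on:
            service["depends_on"] = sorted(depends_on)
        return {component: service}


provider: DeploymentProvider = ComposeProvider()
services: dict = {}
services.update(provider.render("orders-db", "postgres", []))
services.update(provider.render("api", "python-service", ["orders-db"]))
print(json.dumps({"services": services}, indent=2))
```

Another provider could implement the same `render` method and emit HCL or call a cloud API instead. The system design stays the same either way.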

The last step before deploying is to create a deployment object, which is essentially a list of the deployment providers you want to use to deploy your DAG. You hand this directory to the Torque Framework and run `torque deployment apply staging`, and the Torque Framework converts the DAG into cloud instances using code from the deployment providers listed in the deployment object.
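The idea behind applying a deployment object can be sketched as follows. This assumes a deployment object is a named, ordered list of providers, and that applying it means walking the DAG in dependency order and asking the first provider that supports each component's kind to handle it. `Provider`, `Deployment`, and `apply_deployment` are hypothetical names. This models the concept behind `torque deployment apply staging`, not Torque's implementation.

```python
# Minimal sketch of a deployment object as an ordered list of providers.
# All names here are hypothetical; this is not Torque's implementation.
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass
class Provider:
    name: str
    kinds: set[str]  # component kinds this provider knows how to deploy

    def render(self, component: str) -> str:
        # Stand-in for generating config or calling a cloud API.
        return f"{self.name}: {component}"


@dataclass
class Deployment:
    name: str
    providers: list[Provider]

    def provider_for(self, kind: str) -> Provider:
        # The first provider in the list that supports this kind wins.
        for provider in self.providers:
            if kind in provider.kinds:
                return provider
        raise LookupError(f"deployment {self.name!r} has no provider for {kind!r}")


def apply_deployment(deployment: Deployment, kinds: dict[str, str], dag: dict[str, set[str]]) -> list[str]:
    """Render every component, dependencies first."""
    order = TopologicalSorter(dag).static_order()
    return [deployment.provider_for(kinds[c]).render(c) for c in order]


kinds = {"api": "python-service", "orders-db": "postgres"}
dag = {"api": {"orders-db"}, "orders-db": set()}
staging = Deployment("staging", [Provider("kubernetes", {"python-service"}), Provider("managed-postgres", {"postgres"})])
print(apply_deployment(staging, kinds, dag))  # → ['managed-postgres: orders-db', 'kubernetes: api']
```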

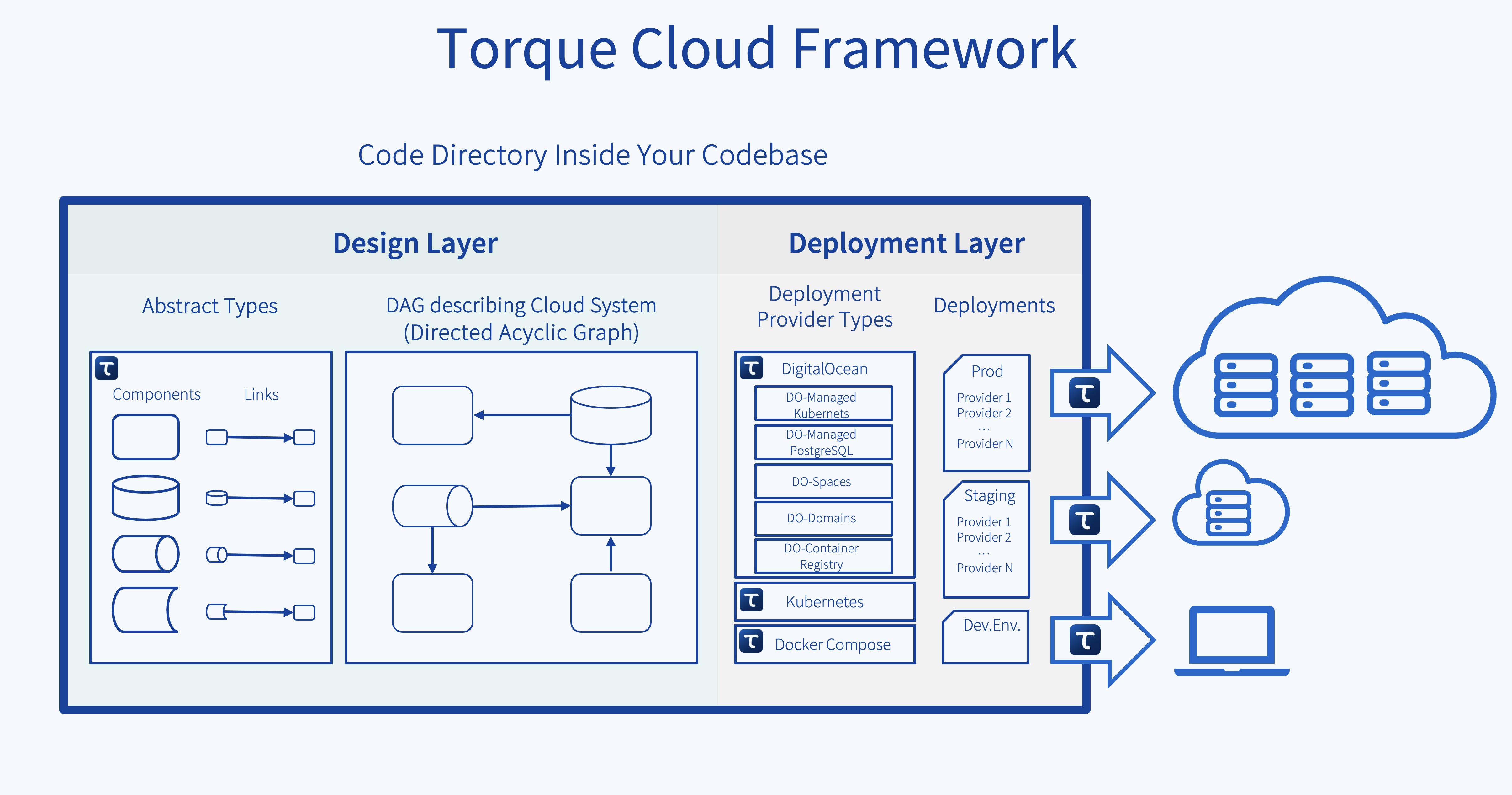

And now we come to the part where we can clearly see that adding an entirely new deployment, like production, leaves the system design untouched. All we need to do is create another deployment object with a list of production-grade deployment providers, and voilà: one command and the production deployment is up and running.

Of course, the same goes for your development environment, too. That’s because, for Torque’s Framework, your development environment is just another deployment with Docker Compose deployment providers optimized for developer experience.
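To show that environments differ only in their deployment objects, here is a sketch with one system design and three deployment objects. For brevity, each deployment object is simplified to a mapping from component kind to a provider name. This simplification and all provider names are illustrative assumptions. Adding an environment means adding one entry to `DEPLOYMENTS`, and the DAG stays untouched.

```python
# Illustrative sketch: one system design, one deployment object per environment.
# Provider names are placeholders, not real Torque providers.
from graphlib import TopologicalSorter

# System design layer: written once.
KINDS = {"api": "service", "worker": "service", "orders-db": "database"}
DAG = {"api": {"orders-db"}, "worker": {"orders-db"}, "orders-db": set()}

# Deployment layer: component kind -> provider, per environment.
DEPLOYMENTS = {
    "dev": {"service": "compose-service", "database": "compose-postgres"},
    "staging": {"service": "k8s-deployment", "database": "managed-postgres-small"},
    "production": {"service": "k8s-deployment-ha", "database": "managed-postgres-ha"},
}


def plan(environment: str) -> list[tuple[str, str]]:
    """Pair each component (dependencies first) with the provider that deploys it."""
    providers = DEPLOYMENTS[environment]
    return [(c, providers[KINDS[c]]) for c in TopologicalSorter(DAG).static_order()]


for env in DEPLOYMENTS:
    print(env, plan(env))
```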

O(c + l + p + d) = O(N)

where N = c + l + p + d and:

c – number of components

l – number of links

p – number of provider types

d – number of deployment objects
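Plugging illustrative numbers into the formula shows that each addition costs a constant amount. The figures (10 components, 30 links, 6 provider types, 3 deployment objects) are assumptions chosen for illustration.

```python
# Illustrative arithmetic for the linear model O(c + l + p + d).
def framework_effort(components: int, links: int, provider_types: int, deployments: int) -> int:
    """Each component, link, provider type, and deployment object counts once."""
    return components + links + provider_types + deployments


baseline = framework_effort(10, 30, 6, 3)
with_production = framework_effort(10, 30, 6, 4)   # one more deployment object
with_new_service = framework_effort(11, 32, 6, 3)  # one new component with two links

print(baseline, with_production - baseline, with_new_service - baseline)  # → 49 1 3
```

Adding a fourth deployment costs one deployment object here. In a copy-paste setup, it would repeat the configuration of every service and link.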

And that’s how the order of complexity in your cloud system can go from exponential to linear, making it much easier to manage, even as it grows.

I know this may have raised a number of questions. Please check out our GitHub, follow us here for more blog posts, or use our contact form at torque.cloud. We'd love to hear from you!

Image credit: Creative Commons Attribution-Share Alike 2.0 Generic license.